1. NerdCalc

In the following, I want to give you some background information, why I created fp64lib and how it evolved.

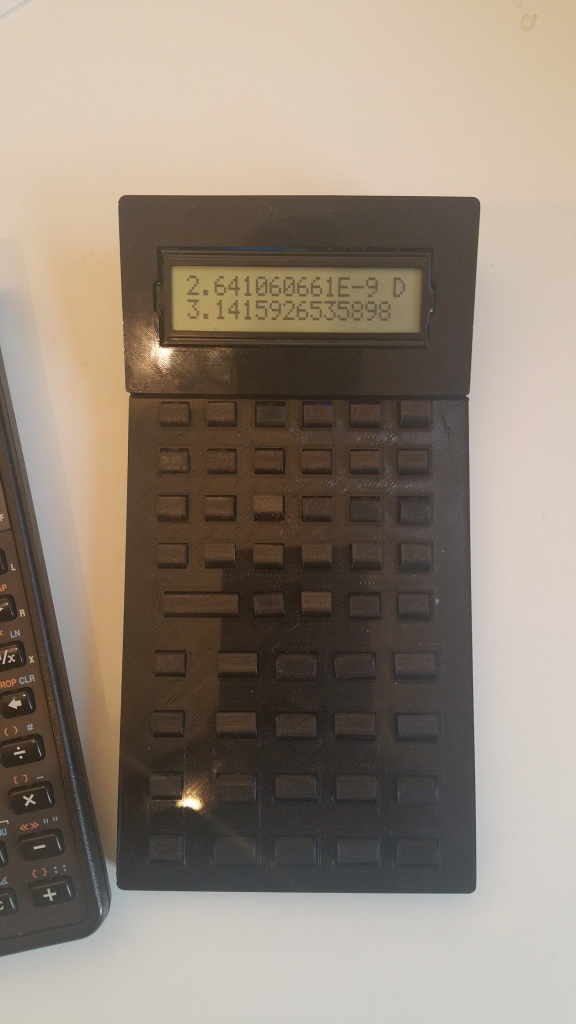

It all started in 2016 with a fun little project I named NerdCalc. As I am a huge fan of calculators made by Hewlett Packard (HP), I wanted to create my own version of an HP calculator. What I had in my mind was:

Create the cheapest possible programmable HP-style calculator

I was thinking of a mixture between two of my favorite calculators: HP-34C and HP-48SX. From the HP-34C, I wanted to take over the set of functions, the easy (or simple) programming capabilities, the capability to run on rechargable batteries. And from the HP-48SX I borrowed the ideas for a big keyboard with 49 keys and a multi-line LCD display.All should be packaged in a self-designed, 3D-printed case, black, with black 3D-printed keys with no labels or other visual distinction: a calculator either for professionals, who know what function is on which key – or for nerds, hence the name NerdCalc.

As a processor, my choice was a cheap Arduino Pro Mini compatible development board, which has an Atmel AVR 328P microprocessor on it with 32KB of programmable flash memory, 2KB of RAM and 1KB of EEPROM. After all the hardware was designed, soldered together, 3D-printed, assembled, and tested, it was time to program that little beauty to emulate a HP calculator.

I created a litlle test program to read the keys and interpret the keys for simple basic maths, i.e. for ading +, subtracting +, multiplying * and dividing / two numbers – of course with double arithmetic. And I was quite frustrated to notice that double == float on the AVR. I did not need full accuracy of 64-bit doubles, but of course a HP calculator supports at least 10 visible digits (normally 12 digits internally) and an exponent range from 10^-99 to 10^99. So stepping down to 7 digits accuracy and an exponent range to 10^+/-38 is definitely not an option.

“The internet is your friend”, I thought and after some minutes, I found that indeed there was a library available to support 64-bit floating point numbers. I was hidden in a German forum on microprocessors www.mikrocontroller.net in this thread. But other people used it, so it seems to work, so let’s go ahead, download it, integrate it into my NerdCalc, and tada: it really worked also on my small calculator.

And the first version with the basic mathematics only used 13960 Bytes (45%) of flash space and 481 Bytes (23%) of ram. This looked very promising.

So I started to add functionalities. The next version already had all the trigonometric functions (sin, cos, tan) and their inverse function (asin, acos, atan) in three angle modes (degrees, radian and grad), logarithmic and power functions (ln, log10, e^x, 10^x, x^y, 1/x, x^2, sqrt) on board. Not all the functions of an HP-34C, but the important 80%. Both, flash memory and data memory, were rapidly filling up: 27478 Bytes (89%) and 946 Bytes (46%).

And, after adding some simple stack operations like push and pop, the statistics functions finally blew it up: 32088 Bytes (104%) of program memory!

2. Starting with Assembler

So, I started to check where and how to save memory. And I soon fouund out, that GCC even in space optimizing mode (-Os) does have some problems in handling 64-bit data. For example, the simple one liner to reset the statistics registers to 0

- case CLSTA: sx=sx2=sn=float64_NUMBER_PLUS_ZERO; break;

was compiled to

-

* case CLSTA: sx=sx2=sn=float64_NUMBER_PLUS_ZERO; break;

* 6732: 10 92 1c 04 sts 0x041C, r1 ; 0x80041c <sn>

* 6736: 10 92 1d 04 sts 0x041D, r1 ; 0x80041d <sn+0x1>

* ...

* 674e: 10 92 23 04 sts 0x0423, r1 ; 0x800423 <sn+0x7>

* 6752: 10 92 14 04 sts 0x0414, r1 ; 0x800414 <sx2>

* 6756: 10 92 15 04 sts 0x0415, r1 ; 0x800415 <sx2+0x1>

* ...

* 676e: 10 92 1b 04 sts 0x041B, r1 ; 0x80041b <sx2+0x7>

* 6772: 10 92 0c 04 sts 0x040C, r1 ; 0x80040c <sx>

* 6776: 10 92 0d 04 sts 0x040D, r1 ; 0x80040d <sx+0x1>

* ...

* 678e: 10 92 13 04 sts 0x0413, r1 ; 0x800413 <sx+0x7>

* 6792: a3 c1 rjmp .+838 ; 0x6ada <main+0x1d7e>

taking up 96 bytes, excluding the final rjmp at the end. By using gcc inline assembly, I rewrote that to a much simpler code, that just needed 14 bytes:

-

* 675e: ac e1 ldi r26, 0x1C ; 28

* 6760: b4 e0 ldi r27, 0x04 ; 4

* 6762: 98 e1 ldi r25, 0x18 ; 24

* 6764: 88 27 eor r24, r24

* 6766: 8d 93 st X+, r24

* 6768: 9a 95 dec r25

* 676a: e9 f7 brne .-6 ; 0x6766 <main+0x1a0a>

* 676c: bb c1 rjmp .+886 ; 0x6ae4 <main+0x1d88>

Using that technique, I was able to reduce memory consumption to some extent. But also combining assembly with other techniques, like using internal knowlegde of how the avr_f64 functions works, using functions pointers, getting rid of all C++ classes like, using low level C functions instead, the challenge remained that I could not fit all needed functionality into 32KB. Especially conversion to String and the trigonometric functions use serveral KB of precious memory. So, in the end it was clear to me: either I stop the idea of developing my own HW calculator on the Atmel AVR platform or I do rewrite at least some routines in assembly language.

3. The first routine: fp64_mul

September 2017 I started hard core assembly implementation of the first routines. A first analysis of avr_f64 showed, that multiplication itself is already quite memory intensive: the core routine f_mult needs 0x3fa = 1018 bytes. And multiplication is very easy to implement, as the Atmel AVR 328P already has a 8 bit x 8 bit multiplication instruction. And multiplication of IEEE 754 floating point is basically just multiplying the significand parts and adding the exponents.

However, before that can be done, some unpacking is needed as binary IEEE 754 standard for 64-bit floating points does have some internal coding, like the hidden significand bit that is always 1 for normal numbers and always 0 for subnormal numbers. The avr_f64 library included a routine for unpacking, f_split64(). But this routine does not support subnormal numbers, which I wanted to include in my library as it helps for some algorithms to provide more numerical stability.

Furthermore, also at the end routines are needed to round the result and some helper routines, like shifting until the topmost bit is set. With that it was clear, that some structure is needed and replacing some routines will not be an easy task but needs a clear structure and apporach. So, I took a look at the naming conventions and structure of the standard library libc, published on http://www.nongnu.org/avr-libc . Having studies the 32-bit float implementation, it was clear for me to re-use the naming structure and their overall structure. It was also clear for me, that I have to implement a significand subset of these library routines. Yes, it’s 78 files, but a lot of file scontain only small routines, so it should be no big problem to extend these to 64 bits – a clear under-estimation of the task that was laying ahead of me.

Implementing multiplication was indeed a walk in the park, including handling of special cases. And the results were very promising: Code size was reduced from 1018 bytes to 532 bytes, and execution time was cut from 274,340 microseconds to 32,876 microseconds for 1000 calculations. A saving of 48% of memory and 88% of time – I did not expect that, especially as the implementation was following the IEEE 754 standard more closely, i.e. by supporting subnormals. This win-win situation, saving both memory and execution time, was one of the key motivations that kept me going.

4. Next level: fp64_add, fp64_sub

After that first success, tackling addition and subtraction was the next logical move. These operation require some more thoughts as ading two numbers requires shifting them in place. Also, a lot more special cases have to be handled and handled differently to multiplication. E.g. multiplying 2 infinite numbers A and B is easy, the result is always INF and the sign of the result is the multiplication of the signs of A and B, so +INF * -INF = -INF. But for addition, +INF + -INF is not defined, but +INF + +INF is. In the end, 19 different cases had to be taken care of.

With that, the overall structure was created for nearly all routines:

Copyright information

Handling of Nan & Inf (*)

Main Entry

Unpacking operands

Check NaN/Inf/0 special cases

Check & handle other special cases

Handle default cases

Round and pack result

Subroutines

(*) The code for Handling Nan & Inf is placed before the main entry to take advantage of the brxx instructions with a negative displacement. This made the overall code smaller.

Another topic that had to be solved with addition was proper rounding. After studying several papers and books, it was clear, that only one rounding mode will be implemented: round to nearest, ties to even. And with the exception of multiplication, which used 72 bits interim precision, all other routines used 56 bits, so 3 bits of additional precision.

After all the different cases had been properly sorted out, including the decision to not got for additional precision for intermediate results beside the 3 bits, implementation was much easier as I had not to take care on any additional registers. Everything just worked on the registers of the operands.

5. Tough stuff: fp64_div, fp64_fmod

Implementing division on the high level seems to be quite easy: just a little bit of subtraction and shifting. Also the special case handling is similar simple to multiplication. So, the first implementation was quite easyly done. However, the problem was to implement it in a way that does not use much code space. And the first version was just full of MOVs, ADDs and ROLs. Also, the initial algorithm did not work for subnormal numbers. So much tweaking, testing and re-testing was necessary to come to the final algorithm.

Even more challenging was fmod, the remainder function. I did not want to go for the “no brain” solution: fmod = x – trunc(x/y)*y, as some accuracy would be lost. The 32-bit floating point implementation was a good starting point, but I modified it and put the core into an internal function that could be called from other routines to return also trunc(x/y), as long as this is less than 2^32.

6. Trigonometric hell

With +, -, *, / and % being implemented, the foundation was laid to tackle the more complex functions. And definitely, one of the “monsters” (regarding code size) were the trigonometric functions.

I first implemented some helper functions to evaluate power series. My first idea was to extend the little polynom 32-bit floats were using to 56-bit. However, a few tests reveiled, that accuracy and convergence were not pretty good. So I took a deeper look into the implementation of avr_f64. Here, rational approximation was used, but with some code I did not understand at that time. It turned out, that a kind of fixed point math was used for a power series.

So, I studied quite some articles and books on approximating trigonomatric functions. I learned all about the power and the drawbacks of power series, and I was soon drawn to the CORDIC algorithm. For me, CORDIC had and has convincing arguments:

- precision does not vary with the argument x

- every iteration will increase precision by 1 bit, guaranteed

- every iteration only involved adding and shifting, two things the Atmel AVR does support

- with guaranteed precision and simple operations comes guranteed timing, independant of the argument x

- calculates sine and cosine at the same time, so no overhead for cosine and tangent is derived by simple division of sine / cosine

- same algorithm, just “driven backwards”, can be used to calculate the inverse functions, arcus sine and arcus cosine

- it was used also in HP calculators

However, implementing CORDIC threw up a lot of problems. First was to find a simple reference implementation: the first dozens of search results all revealed reference implementation that were using double arithmetic. Finding an implementation that just used integer math was a challenge, finally I found one of the University of Texas, which itself was based on code of Dr. Dobbs Journal of 1990.

After adopting the algorithm , the next challenge was create a table of constants. CORDIC relies on a table of atan values, from atan(1/2) to atan(1/2^53) – if you go for 53 bits of precision. So I created a small C program, running on a Linux machine, that computed these values using long doubles. However, starting at 2^-35 the standard math.h routines did no longer compute atan(x), but just returned x. So, I rewrote the code, using the quadmath library (128-bit precision), and got all my constants down to atan(2^-53).

But the next challenge was already waiting: The Atmel AVR microprocessor has 32 registers. Sounds great, but not when you are handling 64-bit/8-byte-values. The core of the algorithms definitely needs 5 64-bit-values, one 8-bit counter and at least one scratch register; all in all 42 registers. And every value is used several times per iteration. I rewrote the algorithm several times to find a sequence that used as little instructions as possible.

By using only integer manipulations, the internal CORDIC algorithm basically operates on a 64-bit fixed point format, using twos complement for negative numbers, 2 digits before the implicit decimal point and 62 digits after the decimal point. Of course, this format has to be converted to IEEE 754 for input and output which was a smaller problem, but still some work.

And then testing of any algorithms that performs iterations was not onlöy a challenge, but a hell – basically due to the design principle that everything will be created and tested using the Arduino IDE. All the algorithms before could be tested quite easily by checking at various points the contents of the two arguments/working registers A and B. But now, at least 3 of the values have to be traced – per iteration! So, a new set of debug routines with a circular buffer were created where every iteration could be traced.

And last but not least: adding the inverse function required some refactoring of the code to bring down the code size and to re-use existing code parts – by the price that some of the optimizations introduced errors in the already existing and tested routines for sine and cosine, which had to be fixed and then re-tested and re-tested and re-tested.

7. Easy after trigonometrics: fp64_log, fp64_exp

logarithm could also be implmented using the CORDIC algorithms – which was now fully tested and working. However, it would require another table of 2*53 constants, which would take up over 800 bytes. As approximation via power/Taylor series would only require 17 constants, needing only 136 bytes, decision was clear to go for a straigtforward power series implementation, using the functions I prepared for sine and cosine, but never used them.

After comparing the output with the reference implementation of avr_f64, the table could be reduced by one entry to 128 bytes.

The exponential function also was implemented using a power series with 17 constants. So, even with the additional code, implementation with Taylor series was much more space efficient that re-using the CORDIC algorithm.

8. Harder than trigonometry: conversion to decimal

On the way, a lot of small routines were implemented like conversion to/from double, comparing two numbers or checking numbers for special values. So, most of what avr_f64 offered was more or less implemented. But one major topic was still missing: conversion to and from decimal. All my testing always relied on the atof- and ftoa-like functions of avr_f64, which was correct, except for subnormals.

The algorithm used in avr_f64 for conversion to decimal qas quite complex, taking serveral iterations to come to the right precision – and still did not output the “correct” number for some values. For example 100.01 can not be represented exactly in binary:

((float64_t)0x4059066666666666LLU) = 100.09999999999999

This is similar to the problem of representing 2/3 exactly in our decimal system. There are a number of algorithms out there, the so called “dragon” algorithms, which deal with the problem of converting “correctly” binary numbers to decimal numbers. After having studied these algorithms, I decided not to go for them for four reasons:

- 32-bit floating point library does not implement them

- avf_f64 does not implement them

- they will take up additional code space

- the problem “disappears” if you do not use full precision

The logic behind argument 4 is: On a microprocessor, you will use conversion to decimal only when you want to interact with some human. Usually, having 10-12 digits of precision in the output(!) is sufficient. As soon as you start to decrease the number of digits, the number will be rounded correctly. So, internally, you will keep the full precision, but for the user, the problem is not visible.

However, that decision was a hard one, as it violates one of the basic assumptions human have: printing out a number and reading it back it should reveal the same number, i.e. everyone assumes that atof(ftoa(0.1)) will return 0.1. This will be not the case in the current version of the library.

But, even with that, the conversion was quite challenging, as it involved getting the logarithm of base 10 of the number and getting powers of 10. Using the log and exp functions above was not an option as that would draw in about 500 byte. So, special routines were created, using 64-bit integers for powers of 10 and a nice approximation routine for log10.

9. Go for full library

More or less, after 18 months, feature equality with avf_f64 was achieved. So, I could stop there and continue to implement NerdCalc. But it was by that time absolutely clear to me, that some essential routines (like round) were missing. So, I checked the 32-bit floating point library, and for each and every routine that was present there, I created a 64-bit equivalent.

And as addon, I included include headers for math.h and avf_f64 compatibility, added more and more documentation, and finally in August 2019, I could release everything as release 1.0.0.

10. Rewriting for accuracy

As I announced the publication of the library also on microcontroller.net, it drew some attention. And the first users detected that in some case the trigonometric functions did have some problems with accuracy, especially in edge cases and when going beyond the usual range of -2*Pi to +2*Pi. But I got also very useful hints on how to improve the performance.

In the end, I threw away all my CORDIC code for the trigonometric routines and replaced it with approximation by power series with carefully selected parameters. To avoid cancellation of significant digits when reducing arguments to the range of 2*PI, I had to blow up accuracy and introduced functions working on 96bits, 80bits of mantissa and 16 bits of exponent. These functions used up the complete register set of the Arduino!

Also for exponential and logarithm functions, different algorithms were chosen to bring up accuracy.

In the end, I refactored the essential routines 2-3 times to scale up accuracy. Refactoring is relatively easy in C/C++, I’ve done this several times. You “only” have to be careful that you touch the right parts. usually, the algorithm is not the problem. Also in Assembly, the algorithm itself is also not the problem, but register allocation and order of execution. They both interact very closely, with the wrong register allocation you end up either with messy code or with a lot of push & pops. And if you are not careful with your order of execution and blow up your code, you will also get spagetti code as all the jump targets for conditional jumps are beyond the 64-byte reach limit. Sometimes I spent several weekends on thinking on register allocation and order of execution, before I refactored a routine.

11. Refactoring for space

The accuracy was now on an acceptable level, in most cases the result was precise to 52-53 bits (out of 53 bits in the data format). But now the code size was increased, as a lot of MOVs and shifts were introduced all over the code. In several big waves, I identified these patterns – sometime with the help of some linux scripts, sometime manually – and moved these into subroutines. Of course this sacrified space of execution time, but the overhead was necletable, as the routines were anyhow still 80+% faster than their counterparts in C. And memory is more scarce than time in Microcomputers.

This resulted in significant memory savings and also better routines were you could see much easier how the algorithm works.

12. The final success: NerdCalc revisited

Of course, after the library was completed, I had to revisit NerdCalc. I migrated the source-code to fp64lib, which was basically renaming all calls to functions starting with f_ to fp64_. With avr_f64, my last version where I stopped development of NerdCacl, needed 31282 Bytes (101%) of program memory – just not fitting into flash memory – and 776 Bytes (37%) for data. The fp64lib version needed only 14272 Bytes (46%) of flash, data space remained untouched. So, flash memory was reduced by 54%, a huge success.

As fp64lib comes with some functions needed for the calculator, but not present in avr_f64 (like square), I could replace also my C functions with the calls to the native fp64lib functions, saving another 56 bytes.

Much more important for me was that before I could remove the restrictions, i.e.switching on

- full 2-dimensional statistic functions,

- gamma function

- ENG display mode

- GRAD angle mode

Even with all these, less than half of the memory was used, enabling me to continue in adding the missing functionality. Now after more than 2 years, I can finish my little fun project NerdCalc -ready for any new obstacles ahead!